How we challenge the Transformer

Having achieved remarkable successes in natural language and image processing, Transformers have finally found their way into the area of recommendation. Recently, researchers from NVIDIA and Facebook AI joined forces to introduce Transformer-based recommendation models, described in their RecSys 2021 publication Transformers4Rec: Bridging the Gap between NLP and Sequential / Session-Based Recommendation, obtaining new SOTA results on popular datasets. They experiment with various popular and successful Transformer models, such as GPT-2, Transformer-XL, and XLNet. To accompany the paper, they open-sourced their models in the Transformers4Rec library, which facilitates research on Transformer-based recommenders. This is a praiseworthy effort, and we congratulate them on the initiative! Naturally, we couldn’t resist checking how our recommender architecture based on Cleora and EMDE compares with the NVIDIA/Facebook proposal.

Data

The Transformers4Rec library features four recommendation datasets: REES46 and YOOCHOOSE from the e-commerce domain, and G1 and ADRESSA from the news domain. In this article, we focus on the YOOCHOOSE e-commerce dataset, as it is closest to our business. YOOCHOOSE contains a collection of shopping sessions, that is, sequences of user click events. The data comes from big e-commerce businesses in Europe and includes different types of products such as clothes, toys, tools, and electronics. Sessions were collected over six months. YOOCHOOSE is also known as RSC15 and was first introduced as a competition dataset in the RecSys Challenge 2015.

In our evaluation, we reuse the default data preprocessing scheme from the Transformers4Rec library. The dataset is divided into 182 consecutive days. A model trained on data up to a given point in time can be validated and tested only on subsequent days.

Models

Transformer Model: the training objective of this attention-based architecture is inspired by the language modeling paradigm. In language modeling, we predict the probability of words in a given sequence, which helps to create semantically rich text representations. In the e-commerce recommendation setting, the model learns the probability of an item occurring at a given position in a sequence (a user shopping session). The authors of Transformers4Rec tested multiple Transformer architectures and language modeling training techniques. We choose the best-performing combination: XLNet with the Masked Language Modeling (MLM) objective. In MLM, items in a session are randomly masked with a given probability, and the model is trained to predict the masked items. During the training phase, the model has access to both past and future interactions within a session.
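To make the masking step concrete, here is a minimal sketch of how a single session could be prepared for MLM training. It is an illustration only, not the Transformers4Rec implementation: the `mask_session` helper, the `MASK` placeholder, and the default masking probability of 0.2 are assumptions made for this example.

```python
import random

MASK = "[MASK]"  # hypothetical placeholder for a masked item


def mask_session(session, mask_prob=0.2, rng=None):
    """Randomly mask items in a session.

    Returns the masked session and a dict mapping each masked
    position to the original item the model should predict.
    """
    if rng is None:
        rng = random.Random()
    masked, targets = [], {}
    for position, item in enumerate(session):
        if rng.random() < mask_prob:
            masked.append(MASK)
            targets[position] = item  # prediction target at this position
        else:
            masked.append(item)
    return masked, targets


if __name__ == "__main__":
    session = ["shoes", "socks", "laces", "polish"]
    masked, targets = mask_session(session, mask_prob=0.5, rng=random.Random(0))
    print(masked, targets)
```

Because unmasked items on both sides of a masked position stay visible, the model can use past and future context when predicting the masked item.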

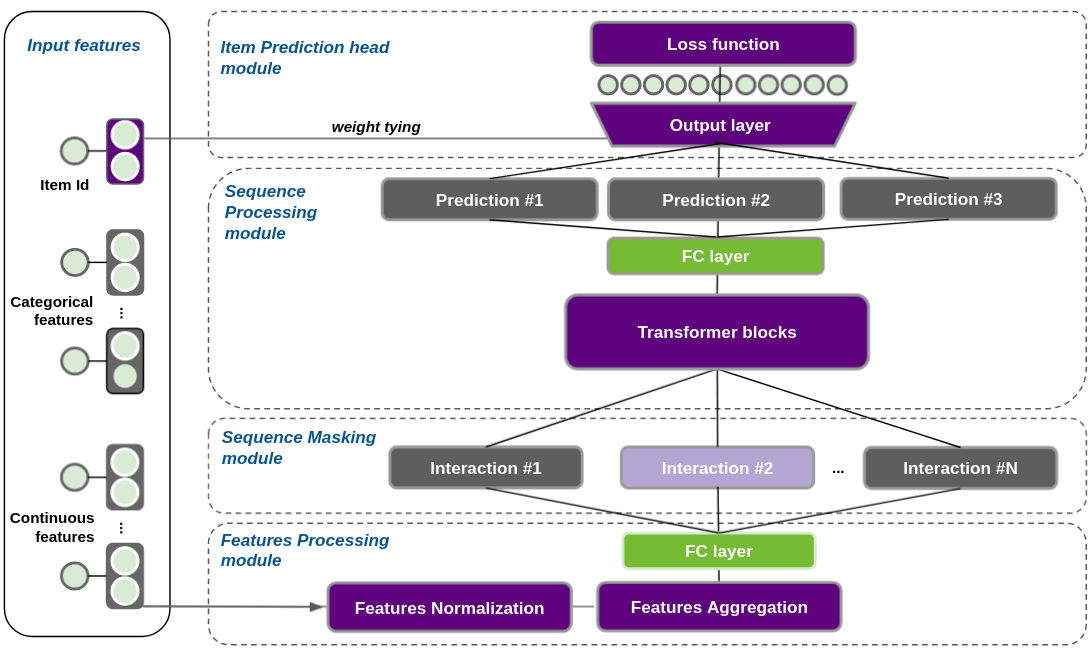

Cleora+EMDE Model: Here we apply extensive unsupervised feature engineering with our proprietary algorithms Cleora and EMDE. The obtained features are fed to a simple 4-layer feed-forward neural network.

First, item embeddings are created with Cleora. We interpret items as nodes in a hypergraph, and we model each pair of items from the same session as connected by a hyperedge. In the next step, we use the EMDE algorithm to create an aggregated session representation. EMDE sketches are fine-grained and sparse representations of multidimensional feature spaces. Sketches aggregating items from a given session serve as input to a simple feed-forward neural network, and the output represents a ranking of items to be recommended. EMDE allows us to easily combine information from different modalities. In this case, we use this ability to combine sketches created from various Cleora embeddings generated with different sets of hyperparameters.
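The pairing of items within a session can be illustrated with a short sketch. This shows only the idea of connecting items that co-occur in a session. It is not the Cleora API, and the `session_item_pairs` helper and the choice to count each unordered pair once per session are assumptions for illustration.

```python
from collections import Counter
from itertools import combinations


def session_item_pairs(sessions):
    """Count unordered pairs of distinct items that share a session."""
    pair_counts = Counter()
    for session in sessions:
        # Deduplicate and sort so that (a, b) and (b, a) map to one key.
        unique_items = sorted(set(session))
        for first, second in combinations(unique_items, 2):
            pair_counts[(first, second)] += 1
    return pair_counts


if __name__ == "__main__":
    sessions = [["x", "y", "z"], ["y", "x"], ["z"]]
    for pair, count in sorted(session_item_pairs(sessions).items()):
        print(pair, count)
```

A single-item session contributes no pairs, so it adds no connections to the graph in this simplified view.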

In the case of the Transformer, item representations are trained together with the whole model, whereas the Cleora and EMDE algorithms are used to create universal aggregated session representations in a purely unsupervised way. Our feed-forward network is supervised, learning the mapping between input sessions and output items, both encoded with EMDE. As usual, we use a simple time decay of sketches to represent the sequential order of items. This is a marked difference from the Transformer, which learns to accurately represent information about item ordering in a session via positional encodings. On the other hand, EMDE focuses on explicitly modeling similarity relations in the feature space, facilitating downstream training and leveraging sparsity in models. In terms of model complexity, the difference between the two neural models is enormous: the Transformer is one of the most complex and sophisticated architectures, while a feed-forward neural network is among the fastest and simplest neural models.
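The time decay idea can be sketched as follows. This is a simplified illustration under stated assumptions, not the actual EMDE code: we assume each item has already been assigned to a few sketch buckets (the hypothetical `item_buckets` mapping), and we assume an exponential decay with an arbitrary factor, since the exact decay scheme is not specified here.

```python
def decayed_session_sketch(session, item_buckets, sketch_size, decay=0.9):
    """Aggregate item sketches so that recent items weigh more.

    item_buckets maps each item to the bucket indices it activates
    (assumed to be precomputed elsewhere).
    """
    sketch = [0.0] * sketch_size
    for item in session:
        # Fade everything seen so far before adding the newer item.
        sketch = [value * decay for value in sketch]
        for bucket in item_buckets[item]:
            sketch[bucket] += 1.0
    return sketch


if __name__ == "__main__":
    item_buckets = {"a": [0, 3], "b": [1, 3]}
    print(decayed_session_sketch(["a", "b"], item_buckets, sketch_size=4, decay=0.5))
```

With this scheme, the same set of items produces different sketches depending on their order, which is how ordering information enters the otherwise order-agnostic aggregation.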

The provided test set contains items that did not appear in training. To resolve this issue, we generate item embeddings based only on items that were present in the training set. For evaluation, when creating input sketches, we simply omit items that were not present during model training. For the Transformer-based architecture, embeddings for all items present in both train and test data were initialized as done in the original code. This approach assumes that the number of items is fixed and does not change over time. In natural language processing, the problem of a fixed vocabulary is commonly resolved by introducing sub-word tokenization. In the recommendation domain, this problem is still open.
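Omitting unseen items at evaluation time can be sketched in a few lines. The helper names `build_vocabulary` and `drop_unseen_items` are hypothetical and only illustrate the filtering step described above.

```python
def build_vocabulary(train_sessions):
    """Collect every item that appears in the training sessions."""
    return {item for session in train_sessions for item in session}


def drop_unseen_items(session, known_items):
    """Keep only items seen during training, preserving their order."""
    return [item for item in session if item in known_items]


if __name__ == "__main__":
    train_sessions = [["a", "b"], ["b", "c"]]
    known_items = build_vocabulary(train_sessions)
    print(drop_unseen_items(["a", "new_item", "c"], known_items))
```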

Training Procedure

We train both models in the next-item prediction task setting. For an n-item session, we use the first n-1 items as input, and the n-th item is the output to be predicted. For simplicity, we use standard, non-incremental training and evaluation procedures. We train models on data from the first 150 days and evaluate on the next 30 days. We also check how the results change for various test time windows. We created three test sets: the first covers one week of test data following the training period, the second covers two weeks, and the third spans one month (30 days). Daily model fine-tuning is often infeasible in production environments, especially with large datasets, which is why we decided to check how prediction quality changes over time.
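The setup above can be sketched as two small helpers: one that turns a session into an input/target pair, and one that splits sessions by day. This is an illustration under assumptions, not the preprocessing code we used: the helper names and the representation of the data as `(day_index, session)` tuples with 1-based day indices are hypothetical.

```python
def next_item_example(session):
    """Split an n-item session into (first n-1 items, n-th item)."""
    if len(session) < 2:
        raise ValueError("a session needs at least two items")
    return session[:-1], session[-1]


def split_by_day(dated_sessions, train_days=150, test_window_days=7):
    """Split (day_index, session) pairs into train and test sessions.

    Days 1..train_days form the training set; the following
    test_window_days days form the test set.
    """
    last_test_day = train_days + test_window_days
    train = [s for day, s in dated_sessions if day <= train_days]
    test = [s for day, s in dated_sessions if train_days < day <= last_test_day]
    return train, test


if __name__ == "__main__":
    data = [(1, ["a", "b"]), (150, ["b", "c"]), (151, ["c", "d"]), (170, ["d", "e"])]
    for window in (7, 14, 30):
        train, test = split_by_day(data, test_window_days=window)
        print(window, len(train), len(test))
    print(next_item_example(["a", "b", "c"]))
```

Calling `split_by_day` with windows of 7, 14, and 30 days yields the three nested test sets, all evaluated against the same model trained on the first 150 days.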

In the case of the XLNet model, we followed the original experiment reproducibility instructions provided by the Transformers4Rec authors, using the set of hyperparameters they fine-tuned for the YOOCHOOSE dataset and the XLNet model with the MLM training objective.

Results

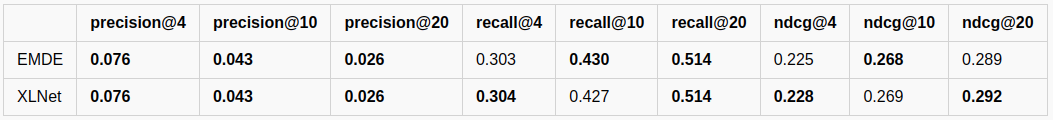

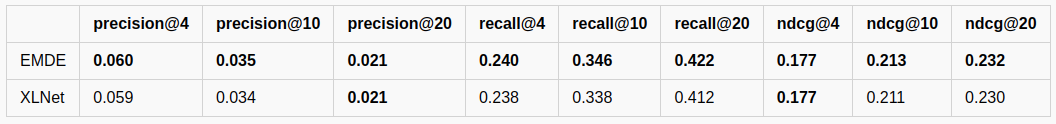

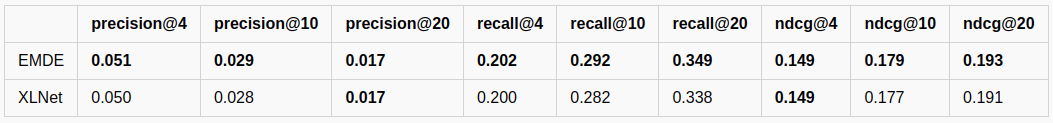

Despite a very simple architecture and short training times, EMDE is a strong competitor, outperforming XLNet on a variety of metrics.

As the test time window grows, performance decreases for both architectures. This can be explained by seasonality and other time-related phenomena, such as the appearance of new items on offer. EMDE seems to be more resilient to those changes, outperforming XLNet in almost all reported metrics in both the two-week and 30-day test settings.

One possible explanation is that the Transformer operates on temporal patterns, while EMDE sketches focus on modeling spatial patterns of the embedding manifolds. Intuitively, it might be easier to distort a time-based pattern that flows in one direction than a pattern spread over a multidimensional manifold. Thus, we suspect that unexpected session ordering or the appearance of unknown items, both of which become more frequent with time, may break the reasoning of Transformer models.

With the ability to create robust item and session representations, Cleora and EMDE can outperform SOTA Transformer-based solutions despite a much simpler architecture.